Enterprises have reached new digital heights thanks to the public cloud, which lets them build dynamic, scalable operations. Organisations can choose from several compute options to match their changing demands, and the serverless model is one of them.

Serverless computing is a cloud computing execution model that lets businesses tap into the processing capability of a cloud service provider (CSP) such as Amazon Web Services (AWS). It helps businesses save money on server operations and maintenance, as well as on related activities like patch management, scaling, and availability. Because the provider takes over these tasks, businesses can focus on developing apps and core products rather than maintaining and securing server infrastructure. As a result, enterprises that go serverless benefit from increased flexibility, automation, cost-effectiveness, and agility.

Serverless Architectures

Serverless computing provides back-end services and lets businesses delegate tasks such as capacity management, patching, and availability to cloud service providers like AWS. Businesses can therefore develop back-end apps without worrying about uptime and scalability. Beyond its relatively low cost, the serverless design also lets businesses create and deploy code without having to administer and secure the underlying infrastructure.

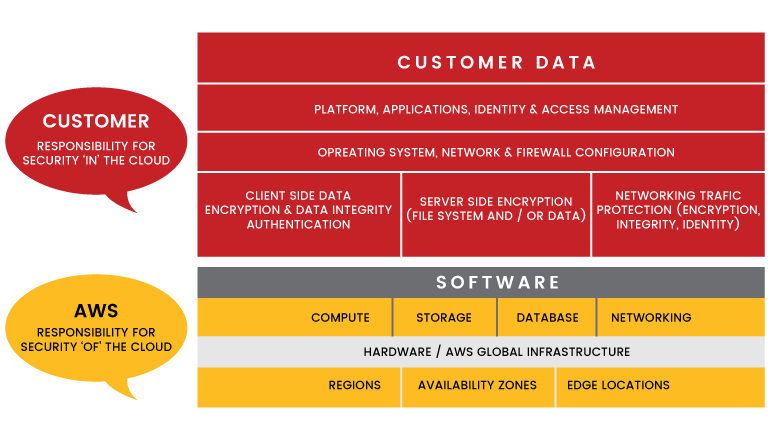

Because the cloud provider manages the infrastructure behind serverless technology, including the hardware, operating system, and software, it also secures those layers. However, although the provider carries a larger share of the security responsibility in serverless computing, the shared responsibility model still applies to serverless services. By comparison, with an infrastructure as a service (IaaS) offering, the user is responsible for implementing all security measures that protect the operating system and application software, and must also administer their own firewall rules and policies when exposing any services to the internet. In either model, the user remains in charge of their own application and data.

Vulnerable Coding Practices and Configuration Issues

For a stronger security posture, users of these services should follow the principle of least privilege when writing policies, and should assign and review permissions regularly. A complex combination of services, however, may be difficult for users to audit manually. Code security is also a concern in serverless setups: because the container host platform is mostly outside the user's scope, teams must concentrate on input, code, and execution security. Enterprises should prioritise static code analysis when planning how to reduce serverless security issues. Some providers also build code scanning into their serverless offerings; AWS, for example, can scan AWS Lambda function code through Amazon Inspector.
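As a rough illustration of verifying rights automatically, the Python sketch below scans an IAM-style policy document and flags `Allow` statements that grant wildcard actions (`*` or `service:*`) or apply to every resource. The function name `find_overly_broad_statements` and the example ARN are hypothetical, and the check is deliberately minimal: it ignores `NotAction`, `NotResource`, and conditions, so treat it as a starting point rather than a complete policy analyser.

```python
def find_overly_broad_statements(policy):
    """Return (index, reason) pairs for Allow statements that break least privilege.

    Assumes an IAM-style policy document: {"Statement": [...]}, where each
    statement may use a string or a list for "Action" and "Resource".
    NotAction, NotResource and Condition are not evaluated in this sketch.
    """
    statements = policy.get("Statement", [])
    if isinstance(statements, dict):  # a single statement may be given as an object
        statements = [statements]

    findings = []
    for i, stmt in enumerate(statements):
        if stmt.get("Effect") != "Allow":
            continue  # only grants can violate least privilege
        actions = stmt.get("Action", [])
        resources = stmt.get("Resource", [])
        if isinstance(actions, str):
            actions = [actions]
        if isinstance(resources, str):
            resources = [resources]
        for action in actions:
            # "*" grants every action; "service:*" grants every action of one service
            if action == "*" or action.endswith(":*"):
                findings.append((i, f"wildcard action {action!r}"))
        if "*" in resources:
            findings.append((i, "wildcard resource '*'"))
    return findings


if __name__ == "__main__":
    policy = {
        "Version": "2012-10-17",
        "Statement": [
            {"Effect": "Allow", "Action": "s3:*", "Resource": "*"},
            {"Effect": "Allow", "Action": ["dynamodb:GetItem"],
             "Resource": "arn:aws:dynamodb:us-east-1:123456789012:table/Orders"},
        ],
    }
    for index, reason in find_overly_broad_statements(policy):
        print(f"statement {index}: {reason}")
```

Running this on a schedule, or in a deployment pipeline, is one way to turn "verify rights regularly" into a repeatable check instead of a manual review.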

Several serverless coding vulnerabilities relate to where and how the code is executed, and they lead to the following critical problems:

Event injection: Injection vulnerabilities still affect serverless code and how it processes input, because functions can be triggered by many event sources, each of which can carry untrusted data. Stronger input validation and well-established database-layer logic, such as stored procedures or parameterised queries, can help resolve this problem.

Broken authentication: Serverless applications should enforce robust authentication and role-based access constraints for their users.

Insecure app secret storage: Serverless functions frequently use API keys, encryption keys, and other secrets at run time. Security teams should require developers to keep these in dedicated secrets management tools and cloud-specific key stores, such as AWS Secrets Manager or AWS Key Management Service (KMS), rather than in code or configuration files.

Improper exception handling: Developers must ensure that errors and exceptions are handled properly, so that stack traces are not exposed or disclosed to callers.
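The sketch below shows how the event-injection and exception-handling advice might look together in a Python function handler. It assumes an API Gateway-style event carrying `queryStringParameters`, uses an in-memory SQLite table as a stand-in for the real data store, and invents an `order_id` format purely for illustration. Input is checked against an allowlist pattern, the query is parameterised rather than built by string concatenation, and unexpected errors are logged internally while the caller only sees a generic message.

```python
import json
import logging
import re
import sqlite3

logger = logging.getLogger(__name__)

# Hypothetical rule for this sketch: order IDs are 1-12 ASCII digits.
ORDER_ID_PATTERN = re.compile(r"[0-9]{1,12}")

# In-memory SQLite stands in for the real data store.
_db = sqlite3.connect(":memory:")
_db.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, status TEXT)")
_db.execute("INSERT INTO orders VALUES (42, 'shipped')")


def handler(event, context=None):
    """Lambda-style handler for an API Gateway-like event (assumed shape)."""
    try:
        params = event.get("queryStringParameters") or {}
        order_id = params.get("order_id", "")

        # 1. Validate input against an allowlist pattern before using it.
        if not ORDER_ID_PATTERN.fullmatch(order_id):
            return {"statusCode": 400, "body": json.dumps({"error": "invalid order_id"})}

        # 2. Parameterised query: the driver binds the value, so it is never
        #    interpreted as SQL, even if validation were somehow bypassed.
        row = _db.execute(
            "SELECT status FROM orders WHERE id = ?", (int(order_id),)
        ).fetchone()
        if row is None:
            return {"statusCode": 404, "body": json.dumps({"error": "not found"})}
        return {"statusCode": 200, "body": json.dumps({"status": row[0]})}
    except Exception:
        # 3. Log full details (including the stack trace) internally, but return
        #    a generic message so no stack trace reaches the caller.
        logger.exception("unhandled error while processing order lookup")
        return {"statusCode": 500, "body": json.dumps({"error": "internal error"})}
```

Calling `handler({"queryStringParameters": {"order_id": "42"}})` returns a 200 response, whereas an `order_id` such as `"42 OR 1=1"` is rejected with a 400 before it ever reaches the database.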

Possible Breaches and Attack Scenarios

AWS makes it easy for customers to set up and configure security measures for serverless services. Malicious hackers, however, look for ways to exploit common user mistakes and misconfigurations, as well as one of the serverless model's core benefits, its distributed nature, to carry out their plans.

Theft of Accounts and Credentials

Functions need secrets to access secured resources. When a hostile actor compromises an application in a serverless system, they can steal the secrets holding its credentials and use them to reach vital resources or even take control of the whole account. The risk is heightened because secrets must be available to the function in cleartext while it runs.
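Environment variables are one common place where secrets end up readable in plaintext by the function process, and therefore by anyone who compromises it. The hypothetical helper below flags environment variable names that look like secrets in a function configuration shaped like the `Environment.Variables` map that AWS Lambda reports for a function. The name patterns are a heuristic assumption, so a clean result does not prove that no secrets are present.

```python
import re

# Heuristic, hypothetical name patterns; extend them to match your own conventions.
SECRET_NAME_PATTERN = re.compile(
    r"(SECRET|PASSWORD|PASSWD|TOKEN|API_?KEY|PRIVATE_?KEY)", re.IGNORECASE
)


def find_plaintext_secrets(function_config):
    """Return sorted names of environment variables that look like secrets.

    function_config is assumed to follow the shape of a Lambda function
    configuration: {"Environment": {"Variables": {name: value, ...}}}.
    Only variable names are inspected; values are never printed or returned.
    """
    variables = (function_config.get("Environment") or {}).get("Variables") or {}
    return sorted(name for name in variables if SECRET_NAME_PATTERN.search(name))


if __name__ == "__main__":
    config = {
        "Environment": {
            "Variables": {
                "DB_PASSWORD": "example",
                "TABLE_NAME": "orders",
                "PAYMENT_API_KEY": "example",
            }
        }
    }
    print(find_plaintext_secrets(config))
```

Any variable this flags is a candidate for moving into a secrets manager and fetching at run time with a narrowly scoped role.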

Sensitive Data and Code Theft

When Amazon S3 buckets host interactive content for a web-based application, hostile actors may be able to find sensitive information and crucial code simply by browsing the site. This can expose details such as login credentials, which attackers can then use with command-line tools to conduct reconnaissance or look for weaknesses to attack.
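To catch this kind of exposure early, the following sketch flags statements in an S3 bucket policy that let any principal read objects, so that a reviewer can confirm nothing sensitive is stored under them. It only covers the simple case of an `Allow` with a `"*"` principal and a read-granting action; object ACLs, condition keys, and S3 Block Public Access settings are deliberately out of scope, and the bucket and account names are placeholders.

```python
READ_ACTIONS = {"*", "s3:*", "s3:GetObject"}


def public_read_statements(bucket_policy):
    """Return indexes of statements that let anyone read objects.

    Assumes an S3 bucket policy document. Conditions, ACLs and Block Public
    Access settings are not evaluated in this sketch.
    """
    statements = bucket_policy.get("Statement", [])
    if isinstance(statements, dict):
        statements = [statements]

    flagged = []
    for i, stmt in enumerate(statements):
        principal = stmt.get("Principal")
        # "*" and {"AWS": "*"} (or {"AWS": ["*"]}) both mean "everyone".
        is_public = principal == "*" or (
            isinstance(principal, dict) and principal.get("AWS") in ("*", ["*"])
        )
        actions = stmt.get("Action", [])
        if isinstance(actions, str):
            actions = [actions]
        grants_read = any(action in READ_ACTIONS for action in actions)
        if stmt.get("Effect") == "Allow" and is_public and grants_read:
            flagged.append(i)
    return flagged


if __name__ == "__main__":
    policy = {
        "Statement": [
            {"Effect": "Allow", "Principal": "*", "Action": "s3:GetObject",
             "Resource": "arn:aws:s3:::example-bucket/*"},
            {"Effect": "Allow",
             "Principal": {"AWS": "arn:aws:iam::123456789012:role/app"},
             "Action": "s3:GetObject",
             "Resource": "arn:aws:s3:::example-bucket/*"},
        ]
    }
    print(public_read_statements(policy))
```

A public-read statement is sometimes intentional for static site assets; the point of the check is to make that decision explicit rather than accidental.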

Privilege Escalation

Privilege escalation can occur when administrators do not correctly specify and configure the permissions associated with a service. This might enable a compromised low-privileged user to change a high-privileged user's password. The same is true of incorrectly set role permission policies, which may allow an attacker to create a new version of a policy and thereby change the permissions it grants. If role permissions are not configured securely, the rogue user could end up with full administrator access.
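Both escalation paths above map to specific IAM actions, for example `iam:UpdateLoginProfile` for changing an IAM user's console password and `iam:CreatePolicyVersion` for rewriting an existing managed policy. The sketch below reports which of a hand-picked, non-exhaustive set of such actions an IAM-style policy grants, expanding wildcards like `iam:*` with Python's `fnmatch`. The action list is an illustrative assumption, not an authoritative catalogue of escalation paths.

```python
from fnmatch import fnmatchcase

# IAM actions often involved in privilege escalation. Illustrative, not exhaustive.
ESCALATION_ACTIONS = [
    "iam:CreatePolicyVersion",
    "iam:SetDefaultPolicyVersion",
    "iam:UpdateLoginProfile",
    "iam:CreateLoginProfile",
    "iam:AttachUserPolicy",
    "iam:AttachRolePolicy",
    "iam:PutUserPolicy",
    "iam:PassRole",
]


def escalation_risks(policy):
    """Return the sorted escalation-prone actions granted by Allow statements.

    Policy actions may contain wildcards such as "iam:*" or "iam:Create*",
    so each granted pattern is matched against every action in the list.
    """
    statements = policy.get("Statement", [])
    if isinstance(statements, dict):
        statements = [statements]

    risky = set()
    for stmt in statements:
        if stmt.get("Effect") != "Allow":
            continue
        actions = stmt.get("Action", [])
        if isinstance(actions, str):
            actions = [actions]
        for pattern in actions:
            for action in ESCALATION_ACTIONS:
                # IAM action names are case-insensitive, so compare lower-cased.
                if fnmatchcase(action.lower(), pattern.lower()):
                    risky.add(action)
    return sorted(risky)


if __name__ == "__main__":
    policy = {"Statement": [{"Effect": "Allow",
                             "Action": ["iam:Create*", "s3:GetObject"],
                             "Resource": "*"}]}
    print(escalation_risks(policy))
```

Here a seemingly harmless `iam:Create*` grant turns out to include `iam:CreatePolicyVersion`, which is exactly the kind of policy-rewriting permission described above.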

Persistence

Serverless functions come with configuration data (name, description, entry point, and resource requirements) and are ephemeral, meaning they have a limited lifespan of a few seconds or minutes. They are also meant to be stateless, so you can quickly launch as many replicas of a function as you need to keep up with the number of incoming events. When an invocation arrives and no idle instance of the function is available, the platform creates a new one; this is called a "cold start" and adds some latency. To reduce that latency, the platform reuses instances, which is known as a "warm start": the same function sandbox or container serves many invocations. Malicious actors who have already compromised an AWS Lambda instance can abuse warm starts by sending requests regularly to keep the instance from being shut down, which lets their foothold persist.
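The reuse behind warm starts is easy to observe: code at module level runs once per execution environment, and anything stored there survives into later invocations served by the same environment. The Python sketch below simulates this by calling a Lambda-style handler repeatedly in one process; on a real platform, environment reuse is likely but never guaranteed. The security takeaway is that cached credentials, decrypted secrets, or state planted by an attacker can outlive the request that created them.

```python
# Module-level code runs once per execution environment (during initialisation),
# so this counter persists across invocations served by a warm environment.
_invocation_count = 0


def handler(event, context=None):
    """Lambda-style handler reporting how often this environment has been reused."""
    global _invocation_count
    _invocation_count += 1
    return {
        "invocations_in_this_environment": _invocation_count,
        "cold_start": _invocation_count == 1,
    }


if __name__ == "__main__":
    # Repeated calls in one process stand in for a warm environment.
    for _ in range(3):
        print(handler({}))
```

Run as a script, the first call reports `cold_start` as `True` and the next two report `False`, with the counter increasing each time.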

Recommendations for Creating Secure Serverless Applications

Some of the recommendations for protecting serverless services are listed below.

(The majority of my recommendations are based on AWS.)

- It is the users, not the CSPs, who are responsible for writing secure code. A thorough code review process is essential for keeping applications secure from conception through deployment.

- Keeping track of all policies, procedures, and permissions can be overwhelming in a large-scale project, especially when it is done by hand. Users can handle complex permissions compliance more easily with a tool that checks configurations against security best practices and industry standards and verifies compliance criteria automatically.

- Most attacks, whether targeted or not, begin with a reconnaissance phase. This is why minimising the footprint of the services in use is a smart security precaution. Users of Amazon API Gateway, for example, can place a native or third-party load balancer, a content delivery network (CDN), or a proxy in front of their apps instead of exposing Amazon API Gateway endpoints directly.
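The automated compliance checking recommended above can be sketched as a small rule runner: each rule is a function that inspects one policy and returns findings, and the runner applies every rule to every named policy. The two rules shown (no full administrator access, no `Allow` combined with `NotAction`) and all names are hypothetical examples; managed services such as AWS Config offer production-grade versions of this idea.

```python
from typing import Callable, Dict, List, Tuple

Policy = dict
Rule = Callable[[Policy], List[str]]


def _statements(policy: Policy) -> List[dict]:
    stmts = policy.get("Statement", [])
    return [stmts] if isinstance(stmts, dict) else list(stmts)


def _as_list(value) -> List[str]:
    return [value] if isinstance(value, str) else list(value or [])


def rule_no_full_admin(policy: Policy) -> List[str]:
    """Flag Allow statements granting every action on every resource."""
    return [
        "grants Action '*' on Resource '*'"
        for s in _statements(policy)
        if s.get("Effect") == "Allow"
        and "*" in _as_list(s.get("Action"))
        and "*" in _as_list(s.get("Resource"))
    ]


def rule_no_allow_notaction(policy: Policy) -> List[str]:
    """Flag Allow + NotAction, which grants everything except the listed actions."""
    return [
        "uses Allow with NotAction"
        for s in _statements(policy)
        if s.get("Effect") == "Allow" and "NotAction" in s
    ]


RULES: List[Rule] = [rule_no_full_admin, rule_no_allow_notaction]


def run_compliance(policies: Dict[str, Policy]) -> List[Tuple[str, str, str]]:
    """Apply every rule to every named policy; return (policy, rule, finding) rows."""
    report = []
    for name, policy in sorted(policies.items()):
        for rule in RULES:
            for finding in rule(policy):
                report.append((name, rule.__name__, finding))
    return report


if __name__ == "__main__":
    policies = {
        "admin-role": {"Statement": [{"Effect": "Allow", "Action": "*", "Resource": "*"}]},
        "reader-role": {"Statement": [{"Effect": "Allow", "Action": "s3:GetObject",
                                       "Resource": "arn:aws:s3:::example-bucket/*"}]},
    }
    for row in run_compliance(policies):
        print(row)
```

Keeping each rule as a plain function makes it easy to add checks, such as the wildcard or escalation checks discussed earlier, without changing the runner.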
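Placing a proxy or CDN in front of Amazon API Gateway also gives you a place to strip response headers that reveal what is running behind it. The sketch below shows only that filtering step. The header names are examples of AWS-identifying and generic fingerprinting headers and are an assumption to verify against your own responses; a real proxy would apply this to every response it forwards.

```python
from typing import Dict

# Example headers that can reveal the backing service. Assumed, not exhaustive;
# check which headers your own API responses actually carry.
FINGERPRINT_HEADERS = {
    "x-amzn-requestid",
    "x-amz-apigw-id",
    "x-amzn-trace-id",
    "server",
    "x-powered-by",
}


def strip_fingerprint_headers(headers: Dict[str, str]) -> Dict[str, str]:
    """Return a copy of the headers without fingerprinting ones (case-insensitive)."""
    return {k: v for k, v in headers.items() if k.lower() not in FINGERPRINT_HEADERS}


if __name__ == "__main__":
    upstream = {
        "Content-Type": "application/json",
        "x-amz-apigw-id": "example",
        "X-Amzn-RequestId": "example",
    }
    print(strip_fingerprint_headers(upstream))
```

Headers are compared case-insensitively because HTTP header names are case-insensitive, so `X-Amzn-RequestId` and `x-amzn-requestid` are treated the same.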